6/26/2025

Published on Convert on 26/06/2025.

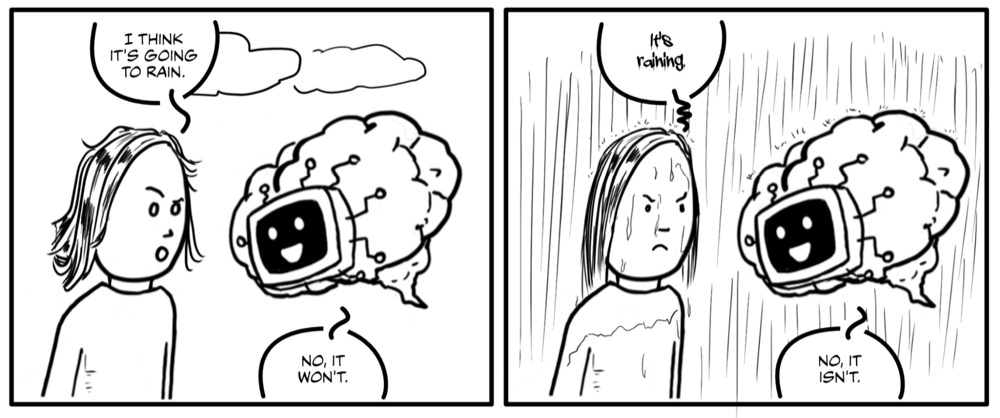

When AI invents or twists facts, trust and decisions suffer. This guide explains the main types of errors and four ways to manage them.

Types of errors:

- Factual (wrong facts)

- Faithfulness (misrepresenting what the source said)

- Logic (invalid inferences)

- Fabrication (inventing things)

Why they happen: LLMs generate text by pattern matching, not by checking it against “truth.” Limits in the data, how the model is tuned, and our own biases in prompts or interpretation all play a role.

Four ways to manage hallucinations:

- Validation: Before you prompt, decide how you’ll check the answer (e.g. find the primary source for a stat, or ask for a quote and check it in the raw data).

- Better prompting: Direct the model’s attention; see the author’s article on precision prompting.

- Self-consistency: Run the same prompt several times (or across models) and compare; inconsistencies can reveal hallucinations.

- Bias check: Ask the AI to review the conversation and point out confirmation bias or leading questions.
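The validation and self-consistency checks above can be partly automated. The following is a minimal sketch, not the author's method: `ask` is a hypothetical stand-in for whatever function sends a prompt to your model, and `normalize`, `self_consistency`, and `quote_in_source` are illustrative names. Exact-match comparison only suits short answers such as a number or a name; longer free-form answers still need a human to compare them.

```python
from collections import Counter
from typing import Callable, List, Tuple


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def self_consistency(
    ask: Callable[[str], str], prompt: str, runs: int = 5
) -> Tuple[str, float, List[str]]:
    """Send the same prompt `runs` times and measure how often answers agree.

    `ask` is an assumed callable (prompt in, answer out); swap in a real
    model call. Returns the most common answer, the share of runs that
    produced it, and every normalized answer for manual review.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    answers = [normalize(ask(prompt)) for _ in range(runs)]
    top_answer, count = Counter(answers).most_common(1)[0]
    return top_answer, count / runs, answers


def quote_in_source(quote: str, source_text: str) -> bool:
    """Validation check: does the quoted passage appear in the raw data?"""
    return normalize(quote) in normalize(source_text)


if __name__ == "__main__":
    # Canned replies stand in for a real model so the sketch runs offline.
    canned = iter(["42%", "42%", "37%", "42%", " 42% "])
    top, agreement, answers = self_consistency(
        lambda _prompt: next(canned), "What share of users churned?", runs=5
    )
    if agreement < 1.0:
        # Any disagreement is a cue to go back to the primary source.
        print(f"Answers disagree ({agreement:.0%} said {top!r}): {answers}")
```

Agreement across runs does not prove an answer is right, since a model can repeat the same mistake consistently. Treat a low agreement score as a prompt to validate, not a high score as proof.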

The full article connects these techniques to interaction patterns and human-in-the-loop review.