9/10/2025

Published on Convert on 10/09/2025.

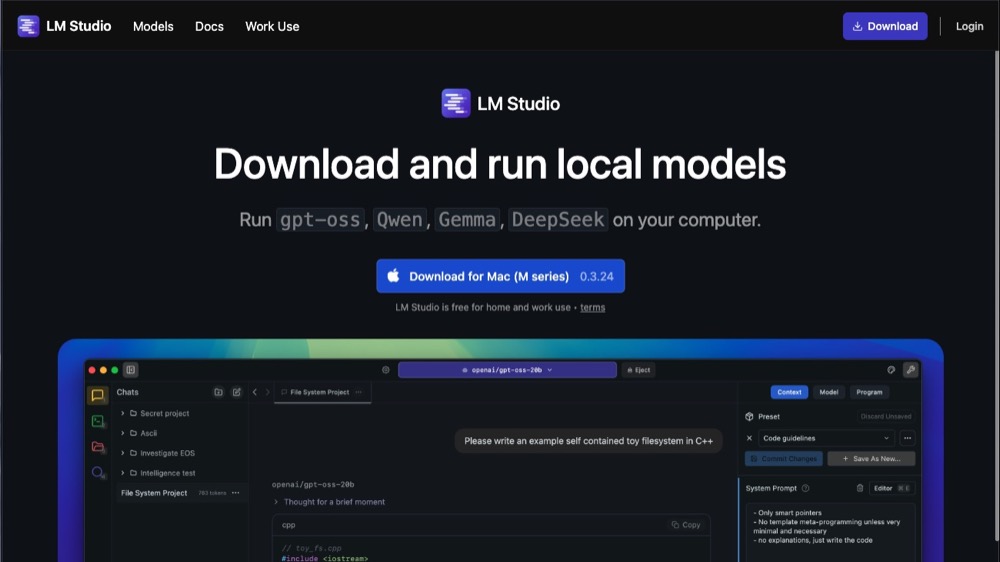

Running smaller models locally can mean better privacy, sustainability, and control. This guide uses LM Studio to discover and run them.

- LM Studio: Detects your hardware and suggests models that fit. You get a familiar chat layout plus a Discover tab for models.

- What to check: Hardware (RAM and GPU), model format (GGUF, or MLX on Apple silicon), size (parameter count and whether the model is marked for “full GPU offload”, meaning it can fit entirely in GPU memory), and what the model is good at.

- Real task: Analyse messy user feedback and pull out insights. The article uses a one-shot prompt and a “perfect” reference output.

- Comparison: Same prompt in ChatGPT (baseline), then in LM Studio with the 0.6B model (fast, few insights) and the 1.7B model (much closer to ChatGPT).
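The comparison can also be scripted rather than run by hand in the chat window. The following is a minimal sketch, not the article's own method: it assumes LM Studio's local server is switched on and serving its OpenAI-compatible API at the default `http://localhost:1234/v1` address. The function names, the instruction text, and the model identifiers in the usage note are hypothetical placeholders.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its default address.
BASE_URL = "http://localhost:1234/v1"


def build_one_shot_messages(example_feedback, example_insights, new_feedback):
    """Build a one-shot chat: one worked example, then the real input."""
    # Hypothetical instruction text; the article's actual prompt is not shown here.
    instruction = (
        "Extract the key insights from the user feedback as a short bullet list."
    )
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": example_feedback},        # example input
        {"role": "assistant", "content": example_insights},   # reference output
        {"role": "user", "content": new_feedback},            # the real task
    ]


def build_request(model, messages, base_url=BASE_URL):
    """Prepare a chat-completions request without sending it."""
    payload = {"model": model, "messages": messages, "temperature": 0}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, messages, base_url=BASE_URL, timeout=120):
    """Send the prompt to one model and return the text of its reply."""
    request = build_request(model, messages, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


def compare(models, messages):
    """Run the same messages against each model and collect the replies."""
    return {model: ask(model, messages) for model in models}
```

With the server running and both models downloaded, `compare(["small-model-0.6b", "small-model-1.7b"], messages)` would return one reply per model for side-by-side reading. Replace the placeholder identifiers with the names LM Studio shows for your models.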

Conclusion: small models are practical and fun to explore. Part 2 pushes the 0.6B model further with breakpoints and better prompting.