5/11/2020

Published in UX Collective (uxdesign.cc).

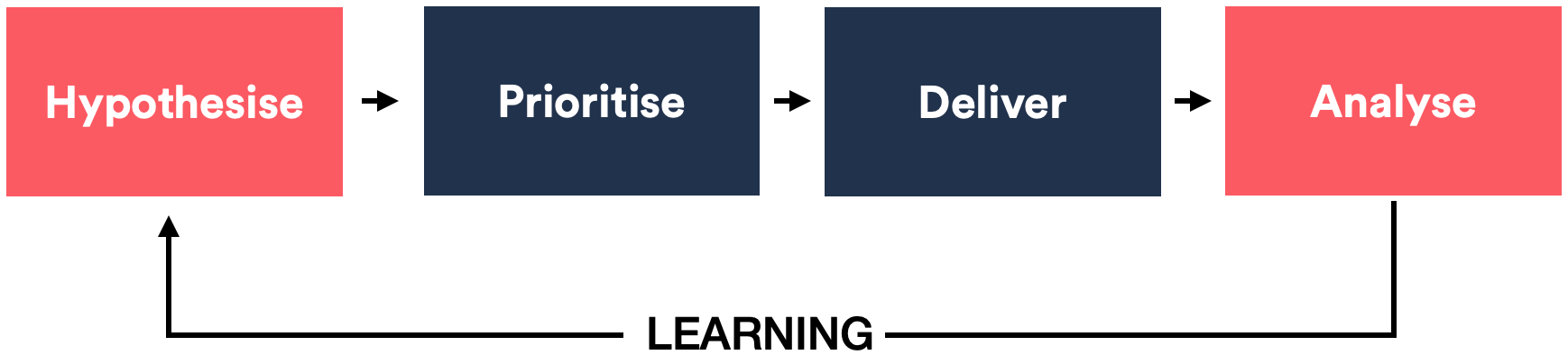

Testing big means bundling multiple changes into one experiment, sometimes across multiple pages. This is sometimes necessary (e.g. low traffic, a big redesign, de-risking a release), but it is risky: if the test loses and we cannot tell which change drove the result, the learning loop breaks. The article gives a hypothesis-based strategy for bundling changes in a way that still supports learning.

- Useful hypothesis: states a clear goal, how we’ll get there, and the variables being changed; adds a justification tied to a conversion lever (clarity, relevance, friction, etc.).

- List all variables being changed and turn them into hypothesis statements.

- Bundle with purpose: start with necessary bundles (changes that depend on each other or are hard to split), then form “decided” bundles, grouped by justification/theme or by experiment goal, with quick wins first and a limit on how many changes go into each test.

- Secondary metrics are essential when bundling so we can learn which behaviours moved.

- MVT/factorial: possible when traffic is high enough; the article discusses the trade-offs (traffic split across more variants, multiple comparisons) and when iterated A/B bundles can be faster.
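
To make the MVT trade-offs concrete, here is a rough sketch of the arithmetic. The visitor count, the number of variables, and the function names are all hypothetical, and the Bonferroni correction is just one common (and conservative) way to handle multiple comparisons, not necessarily the one the article has in mind.

```python
def factorial_cells(levels_per_variable):
    """Number of variant cells in a full-factorial test."""
    cells = 1
    for levels in levels_per_variable:
        cells *= levels
    return cells


def traffic_per_cell(visitors, levels_per_variable):
    """Visitors each cell receives under an even split."""
    return visitors // factorial_cells(levels_per_variable)


def bonferroni_alpha(alpha, comparisons):
    """Per-comparison significance threshold under a Bonferroni correction."""
    return alpha / comparisons


# Hypothetical numbers: 3 variables with 2 versions each, 40,000 visitors.
levels = [2, 2, 2]
visitors = 40_000

cells = factorial_cells(levels)                  # 2 * 2 * 2 = 8 cells
per_cell = traffic_per_cell(visitors, levels)    # 40,000 / 8
ab_per_arm = visitors // 2                       # a plain A/B split for comparison

# Assumes each non-control cell is compared against the control cell.
alpha_each = bonferroni_alpha(0.05, cells - 1)

print(f"{cells} cells, {per_cell} visitors per cell (vs {ab_per_arm} per A/B arm)")
print(f"per-comparison alpha: {alpha_each:.4f}")
```

Each cell gets a quarter of the traffic an A/B arm would, and each comparison faces a stricter threshold, which is why a sequence of bundled A/B tests can sometimes reach conclusions sooner.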

The article includes diagrams for variables, the hypothesis template, bundling, and MVT vs sequential A/B, and stresses the trade-off between learning/de-risking and speed.
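
As an illustration of the hypothesis and bundling steps summarised above, the sketch below records each change as a hypothesis and groups hypotheses by their justification, with a cap on changes per test. The field names, the statement wording, the example changes, and the `max_changes` cap are assumptions made for this example, not the article's own template.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    variable: str        # what is being changed
    change: str          # how it is being changed
    goal: str            # the outcome we expect
    justification: str   # conversion lever, e.g. clarity, relevance, friction

    def statement(self):
        # Hypothetical wording; the article's template may differ.
        return (f"Changing {self.variable} to {self.change} will {self.goal} "
                f"because it improves {self.justification}.")


def bundle_by_justification(hypotheses, max_changes=3):
    """Group hypotheses by lever, splitting groups larger than max_changes."""
    grouped = defaultdict(list)
    for h in hypotheses:
        grouped[h.justification].append(h)
    bundles = []
    for lever, items in grouped.items():
        for start in range(0, len(items), max_changes):
            bundles.append((lever, items[start:start + max_changes]))
    return bundles


# Hypothetical changes for a landing-page test.
hypotheses = [
    Hypothesis("the headline", "a benefit-led headline", "increase sign-ups", "clarity"),
    Hypothesis("the CTA copy", "an action-specific label", "increase sign-ups", "clarity"),
    Hypothesis("the form", "three fields instead of seven", "increase sign-ups", "friction"),
    Hypothesis("the hero image", "a product screenshot", "increase sign-ups", "relevance"),
]

bundles = bundle_by_justification(hypotheses, max_changes=2)
for lever, items in bundles:
    print(lever, [h.variable for h in items])
```

Bundling by lever means that if a test wins or loses, the result still says something about that lever, even when the individual changes cannot be separated.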